Acharya Narendra Dev College/Linux Cluster Laboratory

Contents

- 1 Learning through Open Source: Our experience building a GNU/Linux-based cluster

- 2 Abstract

- 3 Aim

- 4 Motivation to get us involved

- 5 Receipt of Machines

- 6 Upgradation of Machines

- 7 Initial Cluster Machines

- 8 Cluster Operating System: Phase 1 - Turnkey Solutions

- 9 Cluster Operating System: Phase 2 - Customised Solutions

- 10 Software Installed

- 11 Parallel Programming

- 12 Further Work

- 13 Our Take

- 14 Links

- 15 References

Learning through Open Source: Our experience building a GNU/Linux-based cluster

Mentor:

Dr. Sanjay Kumar Chauhan

Batch-2009

Ankit Bhattacharjee, Animesh Kumar, Sudhang Shankar, Arjun Suri

Batch-2010

Amit Bhardwaj, Abhishek Nandakumar, Priyanka Beri, Manu Kapoor, Ajaz Ahmed Siddiqui, Reyaz Ahmed Siddiqui

Abstract

A computer cluster is a flexible, inexpensive and simple way to construct an architecture for developing and deploying parallel programs. This paper describes the construction of a cluster at ANDC using discarded hardware and a suite of free software. It is our position that the project enabled the members of our team to learn, in a hands-on way, about an interesting field that was not part of the normal educational setup at DU, something that would have been impossible without the culture of freedom. Furthermore, we feel it gave us an appreciation of the free software philosophy and convinced us that it is superior to the closed "black-box" approach adopted by proprietary software companies. In fact, we also feel that the free culture can and should be adopted by other fields, particularly education. Lastly, we recommend free software, and projects built on free software, to other colleges that haven't yet "seen the light"!

Aim

- To build a cluster

- To effectively reuse discarded hardware and understand hardware upgradation.

- To learn more about clustering, parallel programming and related fields

Motivation to get us involved

The cluster project started through a discussion between the Principal of ANDC, Dr Savithri Singh, and the Director of OpenLX, Mr Sudhir Gandotra. During and after a Linux workshop in 2007, Dr Singh inducted Dr Sanjay Chauhan of the Physics department into the cluster project, and somehow a kernel of students got involved in the project. While they were all enthusiastic, the project was very challenging. The challenges were of two sorts: technical, especially the reclamation of the to-be-junked hardware, and human, mostly relating to the players' lack of experience and know-how. Of these, the latter was especially costly, since it led to significant man-hours being spent on suboptimal and downright incorrect 'solutions' that could have been avoided had the team been slightly more knowledgeable. This was compounded by the fact that all this had to be done in the team members' "spare time", which was often as little as half an hour a day.

The main team members at this point were:

- Ankit Bhattacharjee

- Animesh Kumar

- Arjun Suri

- Sudhang Shankar

They were led by Dr. Chauhan. A number of other students had become involved at first, but were unable to accommodate the demands of the project and hence could not commit to it. After a few months, we were joined by some more students:

- Abhishek Nandakumar

- Ajaz Ahmed Siddiqui

- Amit Bhardwaj

- Manu Kapoor

- Priyanka Beri

- Reyaz Ahmed Siddiqui

Receipt of Machines

The project officially started when the team was presented with 20 decrepit machines of which about 5 worked immediately. Many of those that did required significant upgrades to be worth deployment in the cluster.

Initially 12 computers were transferred from the college's previous computer labs to the cluster lab. Their specifications and condition were as follows:

| S.No. | Item | Serial Number | Condition |
|---|---|---|---|
| 1 | CPU, PII-350, 128 MB | 01990C72167 | Motherboard burnt, beyond repair |
| 2 | CPU, PII-350, 128 MB | 0N90C73782 | Motherboard burnt, beyond repair |
| 3 | CPU, PII-233 | 1NOMC9838490 | Motherboard burnt, beyond repair |
| 4 | CPU, PII-233 | 1NOMC9837974 | Motherboard, SMPS, optical drive and HDD faulty; beyond repair |
| 5 | CPU, PII-233 | 1NOMC9838494 | Motherboard, SMPS, optical drive and HDD faulty; beyond repair |
| 6 | CPU, PII-233 | 1NOMC9838448 | Motherboard, SMPS, optical drive and HDD faulty; beyond repair |
| 7 | CPU, PII-233 | 1NOMC9838495 | Motherboard, SMPS, optical drive and HDD faulty; beyond repair |
| 8 | CPU, PII-233 | 1NOMC9838477 | Motherboard, SMPS, optical drive and HDD faulty; beyond repair |
| 9 | CPU, PII-233 | 300PAM016086 | Motherboard, SMPS, optical drive and HDD faulty; beyond repair |
| 10 | CPU, PII-233, 16 MB | 09980C53266 | Motherboard, SMPS, optical drive and HDD faulty; beyond repair |
| 11 | CPU, PII-233 | B980065165 | Motherboard, SMPS, optical drive and HDD faulty; beyond repair |
| 12 | CPU, PII-233, 16 MB | B980063912 | Motherboard, SMPS, optical drive and HDD faulty; beyond repair |

In addition to these machines, we were given 15 other machines that had been dumped in the storage room.

Upgradation of Machines

Since the machines with which we had to build a cluster were very old, we had to upgrade them all to a usable state. This was the lengthiest stage of the project.

The difficulty of maintenance was compounded by the often conflicting time constraints under which the members had to work. Over a period of 5 months, 12 machines were repaired, while the rest were discarded after "scavenging" useful parts from them for future use and for the salvageable machines.

RAM

Since a fairly "impressive" cluster needed to be at least visibly fast to the lay observer, the machines' RAM had to be upgraded, both so that they could run fairly modern base GNU/Linux distributions and so that memory would not become a bottleneck in the cluster. One issue we faced was that we could not simply buy the latest RAM modules on the market: those would have been newer DDR2 modules, while the machines could only take older modules. Some machines could not be used at all, since they only accepted long-obsolete DRAM modules, which were not only very low in capacity but also very hard to find. We decided that it was best to give up on these machines and instead use their "good" parts (hard disks, CD-ROM drives, wires) to upgrade other machines. We then purchased 25 SDRAM modules of 256 MB each and installed one or more of them in every working machine.

Optical Drives

In most machines that we received, the CD-ROM drives were non-functional. We tried long and hard to repair them, but met with no success. Ultimately, we gave up and bought CD-ROM drives.

Hard Disks

Many of the machines that we received had obsolete hard disks, with capacities ranging from half a GB to four GB. This would not be nearly enough for any real-world data, and it would not be possible to install a modern Linux distribution on such machines. With this in mind, we set about buying hard disks. We were forced to limit our purchases to 20 GB disks, because the motherboards of these Pentium II machines could not handle larger disks. We ended up purchasing 5 such 20 GB disks.

Miscellaneous Purchases and Acquisitions

To set up the physical layer of the computer network, we purchased a crimping tool, which we used to attach RJ-45 connectors to Ethernet cable. We also bought a network switch. Each node of the cluster was connected to the switch through one of the crimped cables, which gave us a network linking all of the machines.

We arranged for electrical wiring and fittings to be set up, and two new boards with 42 sockets and 6 switches were installed. As a power backup solution, we had two 5 kVA online UPSes installed.

We also bought a pack of CMOS cells for the machines. In addition, we acquired a pack of 100 CDs, screwdrivers, and switches for the power buttons of the computers (before this purchase, we had been booting and shutting down some machines by shorting the power wires by hand).

12 machines were finalised after all the upgradation was finished.

Initial Cluster Machines

4 of these 12 machines were joined to form the "first" cluster. We decided to start with a small cluster and add machines to it as our understanding progressed.

These machines were named after characters from the television show "The Simpsons". The following table lists their hardware specifications:

| Name | RAM (in kB) | Hard Disk Capacity (in GB) | CPU type | Network Card |
|---|---|---|---|---|
| Homer | 254540 | 20.4 | Intel Pentium II | Compex ReadyLink 2000 |
| Lisa | 514180 | 20.5 | Intel Pentium II | Compex ReadyLink 2000 |
| Apu | 254540 | 20.4 | Intel Pentium II | RealTek Semiconductor 8139 |
| Marge | 254472 | 20.0 | Intel Pentium III | RealTek Semiconductor 8029 |

Cluster Operating System: Phase 1 - Turnkey Solutions

After the first few machines had become usable, it was felt that the members lacked the requisite knowledge to implement changes at kernel level. Therefore, a decision was made to use a "turn-key" solution. These were generally in the form of Linux distributions with all the required tools pre-packaged, which we felt would make our task easier.

clusterKnoppix

We first homed in on the clusterKnoppix distribution. clusterKnoppix was a Linux liveCD (a CD containing an operating system that runs directly from the CD, without needing to be installed on the system's hard disk) that used a kernel patched with openMosix.

openMosix was a clustering system (based on "Mosix" from the Hebrew University of Jerusalem) that extended the Linux kernel so that processes could migrate transparently among the machines of a cluster, distributing the workload more evenly. clusterKnoppix's premise was that it provided a "single system image" (SSI), i.e. the whole cluster behaved like a single OS. A process started on one machine could be moved automatically to another machine if openMosix judged that it would run faster there. We were keen on using clusterKnoppix for the following reasons:

- It provided a liveCD environment, which would ostensibly make setting up a cluster a matter of minutes

- Unlike other systems, it would let us work without having to learn parallel processing

- It provided a number of GUI (Graphical User Interface) tools for visualising the cluster

- It also provided a number of science-based benchmark tools.

However, clusterKnoppix turned out to be the wrong decision for us, though we only found out after lots of frustrating trials. The problems we faced were:

- About a month into the project, we found out that the openMosix project was about to be shut down. The reason for this was that the developers felt that SSI systems were on their way out, thanks to the burgeoning of multi-core architectures that would popularize threaded programming (similar to parallel programming)

- Regardless, we tried to continue with clusterKnoppix, even though it had not been updated for a while

- Also, we ran into a number of incompatibilities with various device drivers (mainly for network cards). These were hard to diagnose, as they were not immediately visible, and none of us had any experience with Linux administration.

During this time, we also tried a number of similar solutions, such as PCQLinux, BCCD and Quantian. All of these failed for much the same reasons.

ParallelKnoppix

After these attempts, we decided to work with a Beowulf-style distribution, since the complexity of the other solutions had been a significant roadblock to success. We found a suitable distribution called parallelKnoppix, developed and maintained by Michael Creel, an econometrics professor at the Universitat Autònoma de Barcelona. parallelKnoppix is a Linux liveCD based on the Knoppix distribution that features the KDE desktop environment, a number of parallel programming libraries and tools, and a series of tests based on econometrics.

Note: As of now, the parallelKnoppix project has been scrapped and replaced with pelicanHPC, a similar project based on Debian Live. It is also developed by Michael Creel.

Using parallelKnoppix was simple:

- One would place the CD in one machine ("the master node") and boot it

- Then, run the setup script provided by parallelKnoppix

- The other machines were configured to boot from LAN, an option that was set in the system BIOS. On starting these machines, the machines would copy essential software to memory from the master node over the LAN. Thereafter, a program made to run in such a cluster (using parallel programming libraries like MPI) would run on as many machines as were turned on.

We faced a problem with this: booting from the LAN works only on machines whose Ethernet cards support PXE (Preboot eXecution Environment), and none of the machines in our cluster had such cards. We were faced with the following possibilities:

- We could purchase new LAN cards: this would have been a very expensive proposition as 6 new LAN cards with PXE boot support would have to be purchased, and there was no guarantee that it would work

- We could purchase an EPROM programmer to burn a PXE ROM image (from rom-o-matic.net) onto an EPROM. We could then plug the programmed EPROM into the old network card and use it just like a new PXE-enabled one.

- The third solution, which we ended up using, was to get Etherboot CDs, place them in the CD-ROM drives of all the "slave" machines and boot from them. This worked just as well as having the right cards. Etherboot implements the above-mentioned PXE standard. To use it with ParallelKnoppix:

- Boot ParallelKnoppix in one of the machines. Set it up as mentioned above.

- Connect another machine to the above one by a network cable, and set it to boot from CD-ROM, by choosing an option in the BIOS setup menu.

- Place the Etherboot CDs in the CD-ROM drives and reboot the machine

- When the machine reboots, it will first boot up the Etherboot image. Etherboot will then pick up the parallelKnoppix image from the other machine, through the network.

Thus, one will have a cluster of the two machines.

This did not work immediately on our cluster: ParallelKnoppix (on the master node) failed to recognise our slave nodes. After a few days of research, we fixed the problem by configuring the master node's setup script to boot the other nodes with ACPI disabled. The other machines were then set up and booted successfully, and we were able to begin parallel programming on our cluster.

We did go on to face some more problems, but they were mostly superficial and minor, being resolved in a day or so. This solution, thus, gave us our first cluster. It was still a turn-key solution, since all the configuration happened automatically, but it does present a cluster that very closely resembles what one would develop on one's own. We highly recommend this approach for people who:

- Are less concerned with the systems aspect of clustering and more with the programming aspect.

- Are pressed for time

- Want a safe and simple way to convert their available Networks of Workstations (NOWs) into clusters in an ad-hoc way

- Are only curious about clustering, and don't want to commit to a bigger clustering project.

After becoming a little familiar with parallelKnoppix, we developed presentations for a workshop on the cluster [Link 1] and on parallel programming [Link 2], which met with some success.

Cluster Operating System: Phase 2 - Customised Solutions

parallelKnoppix was an ad-hoc solution we used to experiment with the process of building a cluster. It was very convenient, as it used a liveCD environment. As we became more familiar with parallelKnoppix and with what we required, we decided to develop our own solution using the 4 upgraded machines we now had.

After the decision to "grow our own" cluster, we took the following steps for setting up the cluster:

OS installation

Installed Ubuntu Server (a GNU/Linux distribution) on all these computers. We chose Ubuntu because:

- Every member of the team is familiar with its working

- It has a very good package manager, with many packages available

We chose Linux because:

- It is open source and also free of cost (installing commercial OSes on multiple machines can be prohibitively expensive)

- All significant high-performance-computing systems are available for Linux

- A lot of documentation for Linux-based clusters is available on the internet and in books

Address Assignment

IP (version 4) addresses were assigned to each node of the cluster, through /etc/network/interfaces (this only works for Ubuntu and other Debian-derived distributions).

- An IP address is a number assigned to each computer's or other device's network interface(s) which are active on a network supporting the Internet Protocol, in order to distinguish each network interface (and hence each networked device) from every other network interface anywhere on the network. (Normally written out in dotted decimal form, e.g. 127.242.0.19)

- /etc/network/interfaces is a file which contains network interface configuration information for both Ubuntu and Debian Linux. This is where one configures how the system is connected to the network. The addresses assigned were:

| Name | IPv4 Address |
|---|---|
| Homer | 192.168.1.1 |
| Apu | 192.168.1.2 |
| Marge | 192.168.1.3 |
| Maggie | 192.168.1.4 |
| Lisa | 192.168.1.5 |
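All five addresses lie in the private 192.168.1.x range. Nodes attached to one switch normally need addresses in the same subnet to talk to each other without a router. The netmask is not recorded above, so the sketch below assumes the common /24 prefix (netmask 255.255.255.0); the function name same_ipv4_network is hypothetical. It parses dotted-decimal strings with the standard POSIX inet_pton call and compares only the network part of each address.

```c
#include <stdint.h>
#include <sys/socket.h>   /* AF_INET */
#include <netinet/in.h>   /* struct in_addr */
#include <arpa/inet.h>    /* inet_pton, ntohl */

/* Hypothetical helper: returns 1 if the two dotted-decimal IPv4 addresses
 * share the same network under a prefix of prefix_len bits (24 means a
 * 255.255.255.0 netmask), 0 if they do not, and -1 on invalid input. */
int same_ipv4_network(const char *a, const char *b, int prefix_len)
{
    struct in_addr ia, ib;

    if (prefix_len < 0 || prefix_len > 32)
        return -1;
    if (inet_pton(AF_INET, a, &ia) != 1 || inet_pton(AF_INET, b, &ib) != 1)
        return -1;

    /* Netmask in host byte order. Shifting a 32-bit value by 32 bits is
     * undefined in C, so a /0 prefix is handled separately. */
    uint32_t mask = (prefix_len == 0)
                        ? 0u
                        : (uint32_t)(0xFFFFFFFFu << (32 - prefix_len));

    /* inet_pton stores addresses in network byte order; convert first. */
    return (ntohl(ia.s_addr) & mask) == (ntohl(ib.s_addr) & mask);
}
```

For example, same_ipv4_network("192.168.1.1", "192.168.1.5", 24) returns 1, whereas comparing 192.168.1.1 with 192.168.2.1 under the same mask returns 0.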

Assigning Names to Machines

Edited /etc/hosts on each node, adding the IP addresses and hostnames of all the machines (including the node itself).

- /etc/hosts is called the "Host File". Since it is difficult to refer to machines by IP addresses, we use hostfiles to assign easy-to-remember names ("hostnames") to each machine, by which we can refer to them. This file maps hostnames to IP addresses.

- The hosts file is used as a supplement to (or a replacement for) the Domain Name System (DNS) on networks of varying sizes. Unlike DNS, the hosts file is under the control of the local computer's administrator. We saw no need to set up a DNS server for our small cluster, so we simply relied on the hosts file.
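In the hosts file, each line holds an IP address followed by one or more names for it, and '#' starts a comment. The hypothetical hosts_lookup function below is a sketch, under those assumptions, of the name-to-address mapping the file provides. Real programs such as ssh do not parse the file themselves; they go through the system resolver (for example getaddrinfo), which consults /etc/hosts.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical helper: look up `name` in text laid out like /etc/hosts,
 * i.e. "address name [alias ...]" per line, with '#' starting a comment.
 * On a match, copies the address into `out` and returns 1; otherwise
 * returns 0. Lines longer than 255 characters are truncated. */
int hosts_lookup(const char *hosts_text, const char *name,
                 char *out, size_t out_len)
{
    const char *blanks = " \t\r";
    char line[256];
    const char *p = hosts_text;

    while (*p != '\0') {
        /* Copy the next line into a writable buffer and advance p past it. */
        size_t n = strcspn(p, "\n");
        size_t copy = n < sizeof line - 1 ? n : sizeof line - 1;
        memcpy(line, p, copy);
        line[copy] = '\0';
        p += n;
        if (*p == '\n')
            p++;

        /* Ignore everything after a comment marker. */
        char *hash = strchr(line, '#');
        if (hash != NULL)
            *hash = '\0';

        /* The first field is the address. */
        char *cur = line + strspn(line, blanks);
        size_t len = strcspn(cur, blanks);
        if (len == 0)
            continue;                /* blank or comment-only line */
        char *addr = cur;
        cur += len;
        if (*cur != '\0')
            *cur++ = '\0';           /* terminate the address field */

        /* The remaining fields are hostnames and aliases. */
        for (;;) {
            cur += strspn(cur, blanks);
            len = strcspn(cur, blanks);
            if (len == 0)
                break;
            char *host = cur;
            cur += len;
            if (*cur != '\0')
                *cur++ = '\0';
            if (strcmp(host, name) == 0) {
                snprintf(out, out_len, "%s", addr);
                return 1;
            }
        }
    }
    return 0;
}
```

With hosts text containing the line "192.168.1.3 marge", hosts_lookup(text, "marge", buf, sizeof buf) returns 1 and copies "192.168.1.3" into buf.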

Setting up NFS

Network File System (NFS) is a networked file system protocol originally developed by Sun Microsystems in 1984, allowing a user on a client computer to access files over a network in a manner similar to how local storage is accessed.

- Made a /mirror directory in every node of the cluster, by running the command (as the root user)

# mkdir /mirror

- Shared /mirror across all the nodes, through the master node, by editing /etc/exports and adding the line:

/mirror *(sync,rw,no_root_squash)

The file /etc/exports serves as the access control list for file systems which may be exported to NFS clients. The no_root_squash option is necessary; otherwise one may get a "permission denied" error when trying to create a file in /mirror.

- Now, on the master node, ran:

# exportfs -ra

The exportfs command makes a local directory or file available to NFS clients for mounting over the network. Directories and files cannot be NFS-mounted unless they are first exported by exportfs. The -a option exports all directories listed in /etc/exports, and -r re-exports them, synchronising the list of exported file systems with /etc/exports.

- Finally, restart the NFS server:

# /etc/init.d/nfs-kernel-server restart

- Now, on each slave node, run:

#mount marge:/mirror /mirror

The mount command tells the operating system that a file system is ready to use and attaches it at a particular point in the system's file system hierarchy. "marge:/mirror" specifies that the remote directory of interest is the /mirror directory on "marge"; the second argument says that the data is to be made available at the local directory /mirror.

- Also, added the following to /etc/fstab (on all slave nodes):

marge:/mirror /mirror nfs rw,hard,intr 0 0

The fstab file typically lists all available disks and disk partitions, and indicates how they are to be initialized or otherwise integrated into the overall system's file system

Adding a User and setting Ownership

- Added a user 'mpiu' on all nodes using the command:

#adduser --home /mirror/home/mpiu mpiu

(Note: this form of the command works only on Debian-based systems.)

- Changed the ownership of /mirror to mpiu (on each node):

#chown -R mpiu /mirror

Setting up SSH

SSH was set up (with no passphrase) for communication between nodes:

After logging in as mpiu, using

#su - mpiu

a DSA key for mpiu was generated.

#ssh-keygen -t dsa

[Pressed enter when asked to enter the filename. Also, left the pass phrase blank]

This key was added to authorised keys, using:

# cd .ssh
# cat id_dsa.pub >> authorized_keys

SSH was tested on each node:

#ssh <other node name> hostname

Installing MPICH2

- The system date and time were checked:

#date

- The system clock was set to the correct time (it had been found to be wrong in the previous step):

#date MMDDhhmmYYYY

- An mpich2 directory was made:

#mkdir /mirror/mpich2

- The mpich2 tarball was downloaded from the mpich2 site and extracted:

# tar xvzf mpich2-1.0.1.tar.gz

- After entering the mpich2 directory:

#cd mpich2-1.0.1

- The source code was compiled:

# ./configure --prefix=/mirror/mpich2 --with-device=ch3:sock
# make
# sudo make install

- The --with-device=ch3:sock option was necessary to force communication over TCP sockets, which MPICH2 needed in order to work correctly on our cluster.

Mpich2 settings

- After successfully compiling and installing MPICH2, the following lines were added to mpiu's ~/.bashrc:

export PATH=/mirror/mpich2/bin:$PATH
export PATH
LD_LIBRARY_PATH="/mirror/mpich2/lib:$LD_LIBRARY_PATH"
export LD_LIBRARY_PATH

- The current Bash (Bourne Again SHell) session was updated with the new contents of the .bashrc file using:

# source ~/.bashrc

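- Next, the MPICH2 installation path was made known to SSH sessions through /etc/environment. That file holds VAR=value assignments, and "sudo echo /mirror/mpich2/bin >> /etc/environment" fails for a normal user, because the redirection is performed by the user's own unprivileged shell. Instead, /mirror/mpich2/bin should be added, as root, to the front of the PATH= line in that file (the other directories shown are a typical default and may differ):

PATH="/mirror/mpich2/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"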

Testing Installation

The installation was tested to check that all the software had been installed:

# which mpd
# which mpiexec
# which mpirun

The output of these commands gave the paths to the corresponding executables

Setting up MPD

Created mpd.hosts in mpiu's home directory, listing the nodes one per line:

marge
apu
bart
homer

and ran:

# echo secretword=something >> ~/.mpd.conf
# chmod 600 ~/.mpd.conf

Ran the following commands to test MPD:

- To create an mpd session:

#mpd &

The output was the current hostname.

- To trace working nodes

#mpdtrace

- To exit from all mpd sessions

#mpdallexit

After this, mpd daemon was started:

# mpdboot -n <number of nodes>
# mpdtrace

The output was a list of names of all nodes.

Running an Example Program

To test the cluster, a sample program (integration algorithm for calculating the value of pi) was executed:

#mpiexec -n <number of nodes> cpi
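The cpi program computes pi as the integral of 4/(1+x^2) from 0 to 1, cutting the interval into n strips and applying the midpoint rule. The sketch below mirrors its work-sharing scheme as we understand it from MPICH's cpi.c example: process number rank (out of size processes) handles strips rank+1, rank+1+size, and so on. The function names are hypothetical, and the MPI calls are left out so that the arithmetic can be checked on a single machine; in the MPI program, the partial results of all processes would be combined with MPI_Reduce using MPI_SUM.

```c
/* Integrand whose integral over [0, 1] equals pi. */
static double cpi_integrand(double x)
{
    return 4.0 / (1.0 + x * x);
}

/* Hypothetical helper: midpoint-rule contribution of process `rank`
 * (0 <= rank < size) when [0, 1] is cut into n strips of width h.
 * Strips are dealt out round-robin: rank r takes strips r+1, r+1+size, ...
 * In the MPI program, the partial sums of all ranks would be added
 * together with MPI_Reduce(..., MPI_SUM, ...). */
double cpi_partial(int n, int rank, int size)
{
    double h = 1.0 / (double)n;       /* width of one strip */
    double sum = 0.0;

    for (int i = rank + 1; i <= n; i += size) {
        double x = h * ((double)i - 0.5);   /* midpoint of strip i */
        sum += cpi_integrand(x);
    }
    return h * sum;
}
```

Summing cpi_partial(10000, r, 4) for r = 0 to 3 gives the same value, up to rounding, as cpi_partial(10000, 0, 1), roughly 3.14159265; the error shrinks as n grows.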

Performance Evaluation

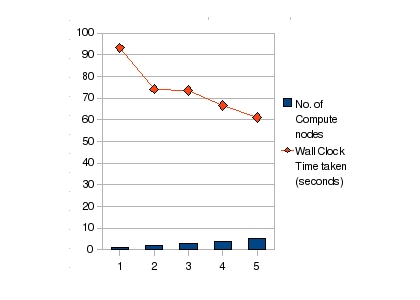

To measure the gains from running programs in parallel, a sequential version of the dartboard algorithm for calculating the value of pi was executed on a single node, and its execution time was noted using the Unix "time" program:

time ./sequential-pi

This output the wall-clock time, user CPU time and system CPU time taken by the program.

Next, the parallel version of the program (mpi-pi-a.c, compiled with mpicc) was run, first on 2 nodes, and the time was again noted using:

# mpicc -o mpi-pi-a mpi-pi-a.c
# time mpiexec -n 2 ./mpi-pi-a

This was done for 3 and 4 nodes too.

The graph at the top of this section represents the resulting computational speedup.
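The speedup on p nodes is conventionally defined as S(p) = T(1) / T(p), where T(1) is the wall-clock ("real") time of the sequential program and T(p) that of the parallel run; the efficiency S(p) / p shows how well the extra nodes are being used. The helpers below simply encode those two definitions. Their names are hypothetical, and no measured timings from our runs are reproduced here.

```c
/* Hypothetical helpers for reading timing results.
 * t_serial:   wall-clock time of the sequential program on one node
 * t_parallel: wall-clock time of the parallel program on p nodes */
double speedup(double t_serial, double t_parallel)
{
    return t_serial / t_parallel;
}

/* Fraction of ideal (linear) speedup achieved on p nodes; 1.0 is perfect. */
double efficiency(double t_serial, double t_parallel, int p)
{
    return speedup(t_serial, t_parallel) / (double)p;
}
```

As a purely illustrative example (not measured data), a job taking 12 s sequentially and 4 s on 4 nodes has speedup(12.0, 4.0) = 3.0 and an efficiency of 0.75.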

Software Installed

- NFS[Link 3] (Network File System) - To grant access to files on the network from any of the configured computers

- OpenSSH[Link 4] (Secure Shell) - For remote access to any one of the computers.

- build-essential[Link 5] - Debian package containing essential compilers and programming libraries.

- gfortran[Link 6] - GNU Fortran Compiler

- The MPICH2[Link 7] MPI library for parallel programming. MPI (Message-Passing Interface) is a parallel programming standard which formalizes the "best practices" of the parallel programming community.

All of the above is free software, free both in the sense of freedom and in cost. It may be downloaded from the linked websites or installed through the package management system of the Linux distribution used.

Parallel Programming

Since we had decided to go with a parallel-programming-based solution (parallelKnoppix), we had to learn parallel programming.

Traditionally, a single computer executes one instruction at a time, sequentially. In a parallel context, a problem is split into multiple tasks, each of which executes simultaneously on a different machine. This can speed up the solution considerably, especially for problems with very large data sets.

Parallel programming is not a cure-all solution, as some problems are very difficult to parallelize. Even so, there are a large number of problems that are both easy and useful to parallelize.

Another problem with parallel programming is that there are limits to the gains one gets from parallelization. One of these is Amdahl's Law, which states that the speedup obtained by parallelizing a program is limited by the sequential portion of the program.
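Amdahl's Law is usually written as S(p) = 1 / (f + (1 - f) / p), where f is the fraction of the running time that must remain sequential and p is the number of processors. A minimal C sketch of the formula (the function name is hypothetical) is:

```c
/* Amdahl's Law: upper bound on the speedup with p processors when a
 * fraction serial_fraction (0.0 to 1.0) of the sequential running time
 * cannot be parallelized. Communication overhead is ignored. */
double amdahl_speedup(double serial_fraction, int p)
{
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / (double)p);
}
```

With f = 0.1, four nodes give at most 1 / (0.1 + 0.9/4), about 3.08 times the sequential speed, and no number of nodes can exceed 1 / f = 10.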

A third problem is parallel slowdown. It is possible that the overhead of network communication becomes a bottleneck in the system, causing the overall system to become slower after parallelization instead of becoming faster. Careful thought is needed to lessen the impact of this problem.
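Parallel slowdown can be illustrated with a deliberately simple, hypothetical cost model: the computation (t_comp seconds on one node) splits evenly across p nodes, while each additional node adds a fixed t_comm seconds of communication. Real communication costs depend on the algorithm and the network, so this model and the function names below are assumptions for illustration only.

```c
/* Hypothetical cost model: evenly divided computation plus a fixed
 * communication cost for every node beyond the first. */
double model_time(double t_comp, double t_comm, int p)
{
    return t_comp / (double)p + t_comm * (double)(p - 1);
}

/* Number of nodes in 1..max_p with the lowest modelled running time. */
int best_node_count(double t_comp, double t_comm, int max_p)
{
    int best = 1;
    for (int p = 2; p <= max_p; p++)
        if (model_time(t_comp, t_comm, p) < model_time(t_comp, t_comm, best))
            best = p;
    return best;
}
```

With t_comp = 1.0 and t_comm = 0.1, the modelled time falls from 1.0 s on one node to about 0.53 s on three, then rises again (0.825 s on eight nodes), so best_node_count(1.0, 0.1, 8) returns 3.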

Despite all these challenges, parallel programming is deployed in a number of situations and fields, ranging from particle physics (e.g. the Large Hadron Collider) to economics (e.g. the NSE's Parallel Risk Management Software for calculating the value at risk of shares) to internet search (e.g. Google uses many clusters).

Choosing a Parallel Programming Solution

Our first challenge was deciding which parallel programming solution to use. Our assessment of each option was:

- MPI (Message Passing Interface)[Link 8]: We chose this as it was widely supported, well-documented and could be used to apply to a wide range of problems

- openMP[Link 9]: we decided not to use openMP because it is designed for shared-memory parallelism (threads on a single machine), which limits the range of problems it suits on a cluster of separate machines like ours

- Hadoop[Link 10]: while this product scales much better than MPI, it is geared towards data parallelism and cannot handle task parallelism well. Moreover, Hadoop is slower than MPI due to its reliance on files for communication[Link 11].

MPI is a standard that facilitates a "message passing" model of parallel programming: each machine works in its own main memory, and the machines communicate with each other by passing messages over the network. The MPI standard, drafted in 1994 to support this type of programming, codified "best practices" already in use in the parallel programming community. The standard has many implementations, principal amongst which are LAM/MPI, MPICH2 and Open MPI. The standard is language-agnostic, and there are implementations for various languages, like C, Python and Java.

How MPI works

- 'Communicators' define which collection of processes may communicate with each other

- Every process in a communicator has a unique rank

- The size of the communicator is the total number of processes in the communicator
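Ranks are typically used to divide data among processes: in an MPI program, each process obtains its rank with MPI_Comm_rank and the communicator size with MPI_Comm_size, then works only on its own share. The sketch below shows one common block decomposition, similar in spirit to the block-decomposition macros in Quinn's book (see References); the function names are hypothetical, and the upper bound here is exclusive.

```c
/* Hypothetical helpers: block decomposition of n data items over `size`
 * processes. Process `rank` (0 <= rank < size) owns the half-open index
 * range [block_low, block_high); block sizes differ by at most one. */
long block_low(int rank, int size, long n)
{
    return (long)rank * n / size;
}

long block_high(int rank, int size, long n)
{
    return block_low(rank + 1, size, n);   /* first index of the next block */
}
```

For 10 items and 4 processes, ranks 0 to 3 receive the ranges [0, 2), [2, 5), [5, 7) and [7, 10), so every item is owned by exactly one rank.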

Application

Having chosen to use MPI, we had to go about learning to use it. To start off, we tried some simple programs like:

- Monte-Carlo "Dartboard" algorithm for calculating the value of pi

- Counting the number of occurrences of a word in a file

- Matrix Multiplication, Addition etc
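The dartboard method throws random points ("darts") at a unit square and counts how many land inside the quarter circle x^2 + y^2 <= 1. Since that region covers pi/4 of the square, four times the hit ratio estimates pi. The sequential sketch below assumes a small xorshift64 generator so that runs are reproducible; it is not the program we used, and the names are hypothetical. In an MPI version, each rank would throw its own share of darts with a different seed, and the hit counts would be summed with MPI_Reduce.

```c
#include <stdint.h>

/* xorshift64 pseudo-random generator (Marsaglia's 13/7/17 variant);
 * the state must be nonzero. Chosen only because it is short and
 * reproducible. */
static uint64_t xorshift64(uint64_t *state)
{
    uint64_t x = *state;
    x ^= x << 13;
    x ^= x >> 7;
    x ^= x << 17;
    return *state = x;
}

/* Uniform double in [0, 1) built from the top 53 bits of a random word. */
static double uniform01(uint64_t *state)
{
    return (double)(xorshift64(state) >> 11) * (1.0 / 9007199254740992.0);
}

/* Hypothetical helper: Monte-Carlo "dartboard" estimate of pi from
 * `darts` random points in the unit square. */
double dartboard_pi(long darts, uint64_t seed)
{
    uint64_t state = seed ? seed : 1;   /* xorshift state must be nonzero */
    long hits = 0;

    for (long i = 0; i < darts; i++) {
        double x = uniform01(&state);
        double y = uniform01(&state);
        if (x * x + y * y <= 1.0)       /* inside the quarter circle */
            hits++;
    }
    return 4.0 * (double)hits / (double)darts;
}
```

A million darts typically gives an estimate within about 0.005 of pi. The error shrinks only in proportion to 1/sqrt(darts), which is why such Monte-Carlo programs benefit from spreading the darts across many nodes.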

Further Work

- Expanding the existing cluster to more nodes. (A 10-node cluster seems plausible)

- Adding a Graphical User Interface to some parallel programming problem, enabling non-programmers to use the cluster

- Producing a parallel programming based project on a bigger scale: We intend to create a more sophisticated system based on application of parallel programming techniques to a real-world problem.

- Testing out other tools for parallel programming, like Hadoop and openMP

- Trying other types of clusters (e.g. high-availability clusters)

Our Take

Our experience in this cluster project has been a fruitful, if at times frustrating one. On the one hand, it gave us a great opportunity to learn something outside the classroom. On the other, it also exposed our shortcomings with respect to organization of such a large project. Either way it was a very educational experience, one which taught us lessons we will not forget for years to come.

Links

- ↑ ANDC cluster presentation by Ankit Bhattacharjee and Animesh Kumar - http://www.slideshare.net/sudhang/the-andc-cluster-presentation

- ↑ Parallel Programming Presentation by Sudhang Shankar - http://www.slideshare.net/sudhang/parallel-programming-on-the-andc-cluster-presentation

- ↑ NFS - http://nfs.sourceforge.net/

- ↑ OpenSSH - http://www.openssh.org/

- ↑ build-essential - http://packages.debian.org/sid/build-essential

- ↑ gfortran - http://gcc.gnu.org/wiki/GFortran

- ↑ MPICH2 - http://www.mcs.anl.gov/research/projects/mpich2/

- ↑ MPI - http://www.mcs.anl.gov/research/projects/mpi/

- ↑ openMP - http://openmp.org/wp/

- ↑ Hadoop - http://hadoop.apache.org/core/

- ↑ MPI vs Hadoop graph - http://jaliyacgl.blogspot.com/2008/07/july-2nd-report.html

References

- Quinn, Michael J. - Parallel Programming in C with MPI and OpenMP, Tata McGraw-Hill Edition - Preview on Amazon or Google Books

- Alemi, Omid - Community Ubuntu Documentation - https://help.ubuntu.com/community/MpichCluster

- Training Materials from the Livermore Computing Centre section of the Lawrence Livermore National Laboratory website https://computing.llnl.gov/tutorials/

- MPICH2 Documentation - http://www.mcs.anl.gov/research/projects/mpich2/documentation/index.php?s=docs